Discover how PowerScale’s new RAG connectors optimize data ingestion, accelerating AI-driven insights and improving data handling efficiency.

At GTC 2025, Dell is launching an open-source connector for retrieval-augmented generation (RAG) applications, built specifically for Dell PowerScale. It is designed to help customers free up CPU and GPU resources on their AI compute clusters, reduce network and storage I/O load, and significantly shorten data processing time.

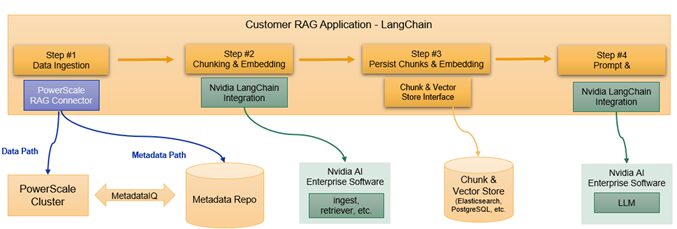

To further enhance data processing with NVIDIA NIM microservices, Dell customers can combine the PowerScale RAG connector with RAG frameworks such as LangChain or with NVIDIA AI Enterprise software. These capabilities integrate with the hardware and software services in the NVIDIA AI Data Platform reference architecture and make it possible to scale agentic AI deployments using the Dell AI Data Platform with NVIDIA.

Why is this important?

When developers build RAG applications and ingest data with their preferred RAG framework, they face a recurring problem: keeping the RAG application current with the latest changes to the dataset.

Typically, developers build a data processing pipeline that imports source data at predetermined intervals from a storage system such as PowerScale, a key storage option in the Dell AI Factory with NVIDIA. This pipeline creates and updates the chunks and embeddings that the RAG application uses. Generating chunks and embeddings is computationally expensive and consumes both CPU and GPU resources.
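
To make the chunking and embedding step concrete, here is a minimal, framework-free sketch of the per-document work such a pipeline performs. It assumes fixed-size character chunks with overlap; `chunk_text` and `fake_embed` are hypothetical helpers, and `fake_embed` is only a deterministic stand-in for a real embedding model (for example, one served as a NIM microservice). A naive pipeline repeats this for every file at every interval, which is where the cost comes from.

```python
import hashlib
from typing import List


def chunk_text(text: str, chunk_size: int = 200, overlap: int = 50) -> List[str]:
    """Split text into fixed-size character chunks that overlap by `overlap` characters."""
    if chunk_size <= 0 or not 0 <= overlap < chunk_size:
        raise ValueError("require chunk_size > 0 and 0 <= overlap < chunk_size")
    step = chunk_size - overlap
    chunks = []
    for start in range(0, len(text), step):
        chunks.append(text[start:start + chunk_size])
        if start + chunk_size >= len(text):
            break  # this chunk already reaches the end of the text
    return chunks


def fake_embed(chunk: str, dim: int = 8) -> List[float]:
    """HYPOTHETICAL placeholder for a real embedding model: a deterministic
    vector derived from a SHA-256 digest, useful only for illustration."""
    digest = hashlib.sha256(chunk.encode("utf-8")).digest()
    return [b / 255.0 for b in digest[:dim]]


if __name__ == "__main__":
    document = "PowerScale " * 60  # 660 characters of sample text
    chunks = chunk_text(document)
    vectors = [fake_embed(c) for c in chunks]  # one model call per chunk in practice
    print(len(chunks), len(vectors[0]))
```

In a real pipeline, the chunker would usually come from the RAG framework and each `fake_embed` call would be a request to an embedding service, so the number of chunks translates directly into GPU work.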

The compute, network, and storage infrastructure is heavily taxed when a RAG application must ingest millions of documents and terabytes of data, particularly when the same documents appear more than once. By reducing the volume of data that must be processed, the PowerScale RAG Connector significantly improves performance. More importantly, the connector intelligently determines which files need processing and which have already been handled. It integrates with generic Python classes, RAG frameworks such as LangChain, NVIDIA NeMo Retriever, and NIM microservices.
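
One generic way to decide which files have already been handled, and to avoid re-embedding the same document when it appears more than once, is content fingerprinting. The sketch below illustrates that general technique; it is not the connector's actual logic, which, as described in this post, relies on PowerScale metadata stored in Elasticsearch. `select_for_processing`, the `seen_hashes` set, and the sample `/ifs/...` paths are hypothetical.

```python
import hashlib
from typing import Dict, List, Set


def content_hash(data: bytes) -> str:
    """Return a SHA-256 hex digest used as a content fingerprint."""
    return hashlib.sha256(data).hexdigest()


def select_for_processing(files: Dict[str, bytes], seen_hashes: Set[str]) -> List[str]:
    """Return paths whose content has not been processed before.

    HYPOTHETICAL helper: files whose content matches an already-processed
    file (including duplicates within this batch) are skipped.
    `seen_hashes` is updated in place so later runs remember this batch.
    """
    to_process = []
    for path in sorted(files):  # sorted for a deterministic order
        digest = content_hash(files[path])
        if digest in seen_hashes:
            continue  # identical content was already chunked and embedded
        seen_hashes.add(digest)
        to_process.append(path)
    return to_process


if __name__ == "__main__":
    seen: Set[str] = set()
    batch1 = {
        "/ifs/docs/a.txt": b"alpha",
        "/ifs/docs/b.txt": b"beta",
        "/ifs/docs/copy_of_a.txt": b"alpha",  # duplicate content of a.txt
    }
    print(select_for_processing(batch1, seen))
    batch2 = {"/ifs/docs/a.txt": b"alpha", "/ifs/docs/b.txt": b"beta v2"}
    print(select_for_processing(batch2, seen))
```

Note that hashing still requires reading every file's bytes, so it saves compute but not storage I/O; that is one plausible reason a metadata-driven approach can be cheaper at scale.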

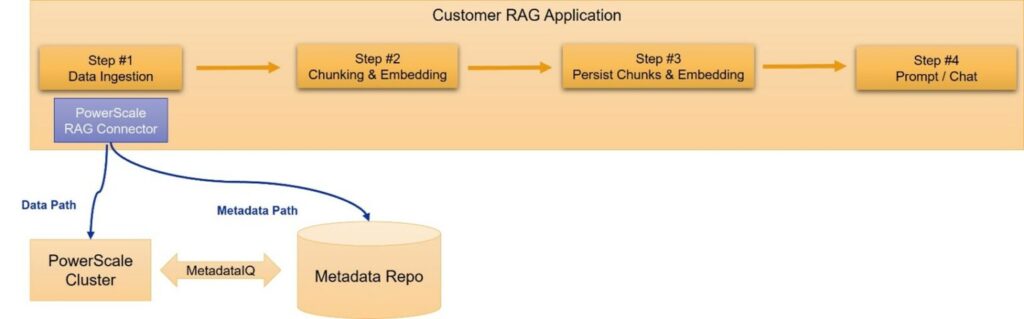

How does it operate?

The new MetadataIQ feature in PowerScale’s latest software release lets administrators periodically save filesystem metadata to an external Elasticsearch database. The PowerScale RAG connector uses the information in this database to track which files have already been processed, improving data ingestion and processing in RAG applications.
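
As an illustration of the kind of query involved, the sketch below builds an Elasticsearch Query DSL body that selects file records modified after the previous ingestion run. The field names (`path`, `mtime`, `is_dir`) are assumptions made for this example; the actual MetadataIQ index schema may differ, so check the PowerScale documentation before relying on any field name. The body uses standard `bool`, `range`, and `term` queries and could be sent to an index's `_search` endpoint.

```python
import json
from datetime import datetime, timezone
from typing import Any, Dict


def changed_files_query(last_run: datetime, size: int = 1000) -> Dict[str, Any]:
    """Build an Elasticsearch Query DSL body for files modified after `last_run`.

    ASSUMPTION: the metadata index stores one document per file with
    hypothetical fields `path`, `mtime` (a date), and `is_dir` (a boolean).
    """
    if last_run.tzinfo is None:
        raise ValueError("last_run must be timezone-aware")
    return {
        "size": size,
        "query": {
            "bool": {
                "filter": [
                    # "gt" = strictly after the previous run's watermark
                    {"range": {"mtime": {"gt": last_run.isoformat()}}},
                    {"term": {"is_dir": False}},  # files only, skip directories
                ]
            }
        },
        "_source": ["path", "mtime"],  # return only the fields the pipeline needs
        "sort": [{"mtime": "asc"}],
    }


if __name__ == "__main__":
    watermark = datetime(2025, 3, 1, tzinfo=timezone.utc)
    print(json.dumps(changed_files_query(watermark), indent=2))
```

Using `filter` clauses skips relevance scoring, which suits a yes/no selection like this. Sorting by `mtime` ascending lets a caller page through large result sets and advance its watermark only as far as the files it has actually processed.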

In both diagrams:

- Developers ingest data from PowerScale using Dell’s open-source, Python-based RAG Connector.

- The connector queries the Metadata Repo (database), which tracks newly created, edited, and updated files.

- Once the database query completes, the RAG Connector returns only new and modified files to the RAG framework; unmodified files are skipped.

- The RAG application processes the new and changed files as usual. These files can be chunked and ingested with standard techniques or with NVIDIA NeMo Retriever.

- The NeMo Retriever suite of NIM microservices allows for the rapid and precise extraction, embedding, and reranking of insights from massive volumes of data.
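
The workflow above can be sketched end to end with a toy in-memory index. This is a hypothetical illustration, not the connector's API: `IncrementalIndex`, its methods, and the fixed-size splitting are assumptions, and in a real deployment splitting and embedding would be handled by the RAG framework or by NeMo Retriever. It shows two properties of incremental ingestion: unmodified files cost nothing, and a modified file's old chunks are replaced rather than duplicated.

```python
from typing import Dict, List


class IncrementalIndex:
    """HYPOTHETICAL toy stand-in for a vector store, keyed by source file path."""

    def __init__(self, chunk_size: int = 100) -> None:
        self.chunk_size = chunk_size
        self.chunks_by_path: Dict[str, List[str]] = {}
        self.chunks_embedded = 0  # counts chunking/embedding work actually done

    def _split(self, text: str) -> List[str]:
        # Simple non-overlapping fixed-size split; a real pipeline would use
        # its framework's text splitter here.
        return [text[i:i + self.chunk_size] for i in range(0, len(text), self.chunk_size)]

    def upsert(self, path: str, text: str) -> None:
        """Replace all chunks for `path` with chunks from the new content."""
        chunks = self._split(text)
        self.chunks_embedded += len(chunks)  # an embedding call per chunk would go here
        self.chunks_by_path[path] = chunks  # any old chunks for this file are dropped

    def ingest_changed(self, changed: Dict[str, str]) -> None:
        """Process only the new or modified files returned by the change query."""
        for path, text in changed.items():
            self.upsert(path, text)


if __name__ == "__main__":
    index = IncrementalIndex(chunk_size=100)
    # First run: every file is new.
    index.ingest_changed({"a.txt": "x" * 250, "b.txt": "y" * 100})
    # Second run: only b.txt changed, so a.txt is never re-read or re-embedded.
    index.ingest_changed({"b.txt": "z" * 150})
    print(index.chunks_embedded, {p: len(c) for p, c in index.chunks_by_path.items()})
```

Keying stored chunks by source path is what makes replacement straightforward; without it, re-ingesting a modified file would leave stale chunks from the previous version in the index.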

Because RAG applications process only new and modified files, CPU, GPU, and network resources are freed up for other computational tasks.

Customers benefit from both Dell’s connector and NVIDIA’s best-in-class RAG capabilities when they use the Dell PowerScale RAG Connector with NVIDIA AI Enterprise software such as NeMo Retriever.

Key Themes: Dell PowerScale RAG Connectors

Addressing the Data Ingestion Bottleneck in RAG

Dell is introducing a new open-source connector for Dell PowerScale to substantially improve the efficiency of data ingestion and processing for Retrieval-Augmented Generation (RAG) applications. It targets a common difficulty for developers: keeping RAG applications up to date with the latest information in their datasets.

Intelligent Data Processing

The connector’s primary job is to intelligently identify and process only newly added or modified files in a PowerScale storage system. This avoids a common problem in conventional RAG pipelines: the computationally expensive reprocessing of unmodified data.

Integration with NVIDIA AI Enterprise and Current RAG Frameworks

The connector is designed to work with NVIDIA AI Enterprise software, including NVIDIA NIM microservices, and with well-known RAG frameworks such as LangChain. This lets customers gain the connector’s benefits within their existing AI workflows and infrastructure.

Resource Optimization

By processing less data, the connector aims to reduce network and storage I/O load and free up critical CPU and GPU resources on AI compute clusters. As a result, RAG applications perform better overall and process data more quickly.

Leveraging PowerScale’s MetadataIQ Feature

PowerScale’s new MetadataIQ feature powers the connector’s intelligent file tracking by periodically saving filesystem metadata to an external Elasticsearch database. The connector queries this database to find new and modified files.
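
Because MetadataIQ saves metadata periodically, an ingestion job also needs to remember where its previous run left off. A common pattern, sketched below under stated assumptions, is to persist a timestamp watermark between runs and advance it only after a batch has been processed successfully. The state file name and JSON layout are hypothetical, and the connector may track processed files differently.

```python
import json
import os
import tempfile
from datetime import datetime, timezone
from typing import Optional

STATE_FILE = "rag_ingest_state.json"  # HYPOTHETICAL location for the watermark


def load_watermark(path: str = STATE_FILE) -> Optional[datetime]:
    """Return the last successful run's watermark, or None on the first run."""
    try:
        with open(path, "r", encoding="utf-8") as f:
            return datetime.fromisoformat(json.load(f)["last_run"])
    except FileNotFoundError:
        return None


def save_watermark(ts: datetime, path: str = STATE_FILE) -> None:
    """Persist the watermark via a temp file plus rename, so readers never see a partial file."""
    directory = os.path.dirname(os.path.abspath(path))
    fd, tmp = tempfile.mkstemp(dir=directory, suffix=".tmp")
    try:
        with os.fdopen(fd, "w", encoding="utf-8") as f:
            json.dump({"last_run": ts.isoformat()}, f)
        os.replace(tmp, path)  # atomic rename on POSIX filesystems
    except BaseException:
        if os.path.exists(tmp):
            os.remove(tmp)  # clean up the temp file if anything failed
        raise


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as d:
        state = os.path.join(d, "state.json")
        print(load_watermark(state))  # None on first run
        run_started = datetime(2025, 3, 18, 12, 0, tzinfo=timezone.utc)
        # ... query for files changed since the old watermark and process them ...
        save_watermark(run_started, state)  # advance only after success
        print(load_watermark(state))
```

Saving the time the run *started* (rather than when it finished) means files modified while the batch was being processed are picked up on the next run instead of being skipped.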

Open-Source Availability

To encourage adoption and community contributions, Dell is releasing the PowerScale RAG connector as an open-source project.