In this blog post we discuss Qwen, Qwen2.5, and Qwen2.5-Coder-32B, a code-focused AI model designed to improve programming efficiency and help you get more out of your development work.

Introduction to Qwen

What is Qwen?

Alibaba Cloud has independently developed a family of large language models (LLMs) called Qwen. By understanding and analyzing natural language input, Qwen can provide services and assistance across a variety of domains and tasks.

Who made Qwen?

Qwen was created by Alibaba Cloud. The model family aims to make artificial intelligence (AI) more capable and practical for natural language processing, computer vision, and audio understanding.

What are the parameters of the Qwen model?

There are four parameter sizes available for the original Qwen model: 1.8B, 7B, 14B, and 72B.

Qwen2.5 Introduction

In the three months since Qwen2 was released, many developers have built new models on top of the Qwen2 language models and provided valuable feedback. During that time, the Qwen team has focused on creating smarter and more knowledgeable language models. The result is Qwen2.5, the newest member of the Qwen family:

- Dense, user-friendly, decoder-only language models that come in base and instruct variations and sizes of 0.5B, 1.5B, 3B, 7B, 14B, 32B, and 72B.

- Pretrained on the Qwen team's latest large-scale dataset, which contains up to 18 trillion tokens.

- Significant improvements in following instructions, generating long texts (more than 8K tokens), understanding structured data (such as tables), and generating structured outputs, especially JSON.

- More resilient to diverse system prompts, which improves role-play implementation and condition-setting for chatbots.

- Long-context support of up to 128K tokens, with generation of up to 8K tokens.

- Multilingual support for more than 29 languages, including Chinese, English, French, Spanish, Portuguese, German, Italian, Russian, Japanese, Korean, Vietnamese, Thai, Arabic, and others.
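To make the points about system prompts and structured JSON output more concrete, here is a minimal sketch of how one might request JSON from a chat model and then validate the reply. The system prompt wording, the helper names `build_json_request` and `parse_json_reply`, and the sample reply are all hypothetical and only for illustration; no model is actually called.

```python
import json

FENCE = "`" * 3  # a Markdown code fence, which chat models often wrap around JSON


def build_json_request(user_request: str) -> list[dict]:
    """Build a chat-style message list whose system prompt asks for JSON only."""
    system_prompt = (
        "You are a helpful assistant. Reply with a single JSON object "
        'containing the keys "language" and "summary".'
    )
    return [
        {"role": "system", "content": system_prompt},
        {"role": "user", "content": user_request},
    ]


def parse_json_reply(reply: str, required_keys: set[str]) -> dict:
    """Parse a model reply as a JSON object and check that required keys exist."""
    text = reply.strip()
    if text.startswith(FENCE):
        # Drop the opening fence line and everything from the closing fence on.
        text = text.split("\n", 1)[1]
        text = text.rsplit(FENCE, 1)[0]
    data = json.loads(text)
    if not isinstance(data, dict):
        raise ValueError("expected a JSON object")
    missing = required_keys - set(data)
    if missing:
        raise ValueError(f"missing keys: {sorted(missing)}")
    return data


# Hypothetical reply, standing in for real model output.
sample_reply = FENCE + 'json\n{"language": "Python", "summary": "Sorts a list."}\n' + FENCE
parsed = parse_json_reply(sample_reply, {"language", "summary"})
print(parsed["language"])
```

Validating the reply on the application side is still worthwhile even with a model that is good at structured output, since nothing guarantees that every reply is well-formed.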

Qwen2.5 Documentation

The Qwen2.5 documentation consists of the following sections:

- Quickstart: basic usage and examples;

- Inference: instructions for running inference with transformers, including batch inference, streaming, etc.;

- Execute Locally: guidelines for running LLMs locally on CPU and GPU with frameworks such as llama.cpp and Ollama;

- Deployment: how to deploy Qwen for large-scale inference with frameworks such as vLLM, TGI, and others;

- Quantization: how to quantize LLMs with GPTQ and AWQ, plus instructions for creating high-quality quantized GGUF files;

- Training: post-training guidelines, including SFT and RLHF (TODO), with Axolotl, LLaMA-Factory, and other frameworks;

- Framework: using Qwen in conjunction with application frameworks, such as RAG, Agent, etc.

- Benchmark: the memory footprint and inference performance data (available for Qwen2.5).
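As a rough illustration of what the Quickstart and Inference sections cover, the sketch below follows the usual Hugging Face transformers chat pattern. It assumes the `transformers`, `torch`, and `accelerate` packages are installed, that the Hub model ID is `Qwen/Qwen2.5-Coder-32B-Instruct`, and that enough GPU memory is available; the helper names `build_chat` and `generate`, the system prompt, and the default of 512 new tokens are illustrative choices, not values from the documentation.

```python
MODEL_NAME = "Qwen/Qwen2.5-Coder-32B-Instruct"  # assumed Hub ID; smaller sizes load the same way


def build_chat(prompt: str) -> list[dict]:
    """Wrap a user prompt in the chat-message format expected by chat templates."""
    return [
        {"role": "system", "content": "You are a helpful coding assistant."},
        {"role": "user", "content": prompt},
    ]


def generate(prompt: str, model_name: str = MODEL_NAME, max_new_tokens: int = 512) -> str:
    """Load the model and return its reply to a single prompt."""
    # Imported lazily so build_chat can be used without the heavy dependencies.
    from transformers import AutoModelForCausalLM, AutoTokenizer

    tokenizer = AutoTokenizer.from_pretrained(model_name)
    model = AutoModelForCausalLM.from_pretrained(
        model_name, torch_dtype="auto", device_map="auto"
    )

    # Render the messages into the model's prompt format.
    text = tokenizer.apply_chat_template(
        build_chat(prompt), tokenize=False, add_generation_prompt=True
    )
    inputs = tokenizer([text], return_tensors="pt").to(model.device)
    output_ids = model.generate(**inputs, max_new_tokens=max_new_tokens)

    # Keep only the newly generated tokens, not the echoed prompt.
    new_tokens = output_ids[0][inputs.input_ids.shape[1]:]
    return tokenizer.decode(new_tokens, skip_special_tokens=True)
```

Calling `generate("Write a quick sort function in Python.")` downloads the weights on first use, so it is worth trying the pattern with one of the smaller model sizes first.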

Qwen2.5-Coder-32B: Overview

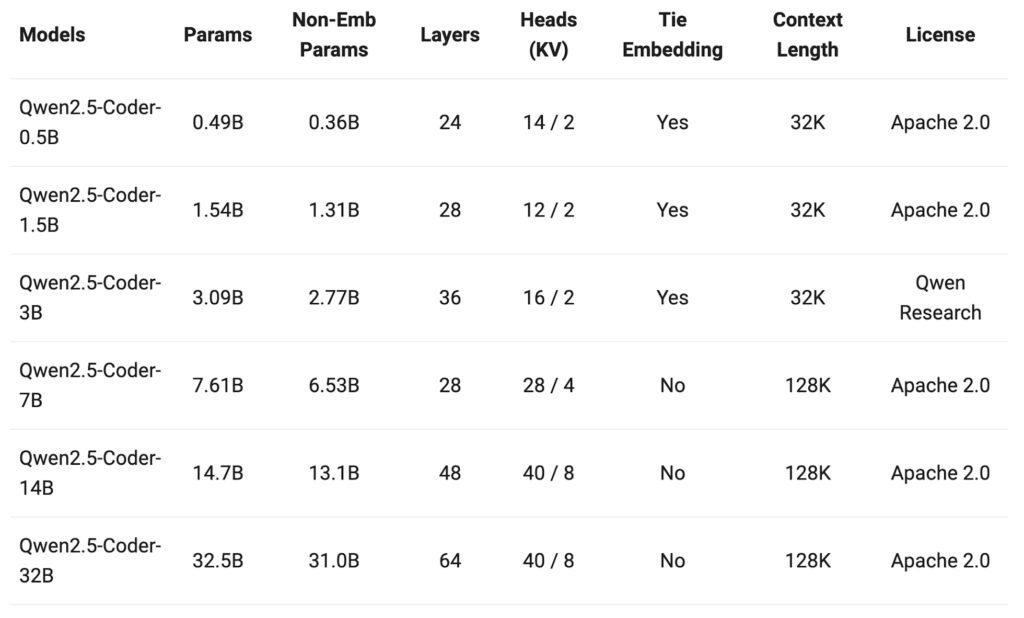

Qwen2.5-Coder is the latest series of code-specific Qwen large language models, formerly known as CodeQwen. To meet the needs of different developers, Qwen2.5-Coder currently covers six mainstream model sizes: 0.5, 1.5, 3, 7, 14, and 32 billion parameters. Compared to CodeQwen1.5, Qwen2.5-Coder offers the following improvements:

- Significant improvements in code generation, code reasoning, and code repair. Building on the strong Qwen2.5 base, training was scaled up to 5.5 trillion tokens, including source code, text-code grounding data, synthetic data, and more. Qwen2.5-Coder-32B is currently the most advanced open-source code LLM, with coding abilities reported to be on par with GPT-4o.

- A more comprehensive foundation for real-world applications such as code agents: it improves coding capabilities while maintaining its strengths in mathematics and general competencies.

- Long-context support of up to 128K tokens.

The instruction-tuned 32B model, Qwen2.5-Coder-32B-Instruct, has the following characteristics:

- Support for multiple programming languages.

- Training stage: pretraining and post-training.

- Architecture: transformers with RoPE, SwiGLU, RMSNorm, and attention QKV bias.

- Number of parameters: 32.5B.

- Number of non-embedding parameters: 31.0B.

- Number of layers: 64.

- Number of attention heads (GQA): 40 for Q and 8 for KV.

- Context length: full 131,072 tokens.
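The layer count, the GQA head counts, and the context length above are enough for a back-of-the-envelope estimate of the key-value cache that inference needs. The sketch below is only that: the head dimension of 128 and the 16-bit cache precision are assumptions not stated in this article, and real inference frameworks add their own overheads.

```python
NUM_LAYERS = 64            # from the list above
NUM_Q_HEADS = 40           # from the list above
NUM_KV_HEADS = 8           # from the list above (GQA)
CONTEXT_LENGTH = 131_072   # from the list above
HEAD_DIM = 128             # assumption: not stated in this article
BYTES_PER_VALUE = 2        # assumption: 16-bit cache (fp16/bf16)


def kv_cache_bytes_per_token(num_kv_heads: int) -> int:
    """Bytes of KV cache stored per token: keys and values for every layer."""
    return 2 * NUM_LAYERS * num_kv_heads * HEAD_DIM * BYTES_PER_VALUE


gqa_bytes = kv_cache_bytes_per_token(NUM_KV_HEADS)
# Hypothetical comparison: what the cache would cost if every Q head had its own KV head.
mha_bytes = kv_cache_bytes_per_token(NUM_Q_HEADS)

print(f"GQA cache per token: {gqa_bytes / 1024:.0f} KiB")
print(f"GQA cache at full context: {gqa_bytes * CONTEXT_LENGTH / 2**30:.0f} GiB")
print(f"Saving versus one KV head per Q head: {mha_bytes // gqa_bytes}x")
```

Under these assumptions the cache comes to 256 KiB per token, or 32 GiB for a single full-length sequence, which is one fifth of what 40 separate KV heads would need. That is the practical reason grouped-query attention matters for long-context models.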

Code capabilities reaching state of the art for open-source models

Code generation, code reasoning, and code repair have all improved significantly, and the 32B model performs competitively with OpenAI's GPT-4o.

Code generation: Qwen2.5-Coder-32B-Instruct, the flagship model of this open-source release, has achieved the best performance among open-source models on several popular code generation benchmarks (EvalPlus, LiveCodeBench, and BigCodeBench) and performs competitively with GPT-4o.

Code repair: Code repair is an important programming skill. Qwen2.5-Coder-32B-Instruct can help users fix errors in their code, making programming more efficient. On Aider, a widely used benchmark for code repair, Qwen2.5-Coder-32B-Instruct scored 73.7, performing comparably to GPT-4o.

Code reasoning: The term “code reasoning” describes the model’s ability to understand how code executes and to accurately predict its inputs and outputs. The recently released Qwen2.5-Coder-7B-Instruct already showed impressive code reasoning performance, and the 32B model improves on it further.
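To illustrate what an output-prediction task of this kind can look like, here is a small sketch. It is not the harness of any particular benchmark: the sample function, the call expression, and the helper names `run_case` and `is_prediction_correct` are hypothetical. The idea is that a model is shown the source and the call, predicts the result without running anything, and the prediction is then checked against real execution.

```python
def run_case(source: str, call: str):
    """Execute the source in a fresh namespace and evaluate the call expression."""
    namespace: dict = {}
    exec(source, namespace)  # only safe for trusted snippets like this one
    return eval(call, namespace)


def is_prediction_correct(source: str, call: str, predicted_output) -> bool:
    """Compare a predicted output with what the code actually returns."""
    return run_case(source, call) == predicted_output


SOURCE = '''
def f(items):
    seen = []
    for x in items:
        if x not in seen:
            seen.append(x)
    return seen[::-1]
'''
CALL = "f([3, 1, 3, 2, 1])"

# A correct prediction: duplicates are dropped in first-seen order, then reversed.
print(is_prediction_correct(SOURCE, CALL, [2, 1, 3]))
# A plausible but wrong prediction that forgets the final reversal.
print(is_prediction_correct(SOURCE, CALL, [3, 1, 2]))
```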

Multiple programming languages

An intelligent programming assistant should be familiar with all programming languages. Qwen2.5-Coder-32B performs well across more than 40 programming languages, scoring 65.9 on McEval, with particularly strong results in languages such as Haskell and Racket. The Qwen team attributes this to its own data cleaning and balancing techniques, applied during the pre-training stage.

Furthermore, the multi-language code repair capabilities of Qwen2.5-Coder-32B-Instruct remain excellent, helping users understand and modify code in languages they already know while significantly lowering the learning curve for unfamiliar ones. MdEval, a counterpart to McEval, is a benchmark for multi-language code repair. Qwen2.5-Coder-32B-Instruct scored 75.2 on it, ranking first among all open-source models.

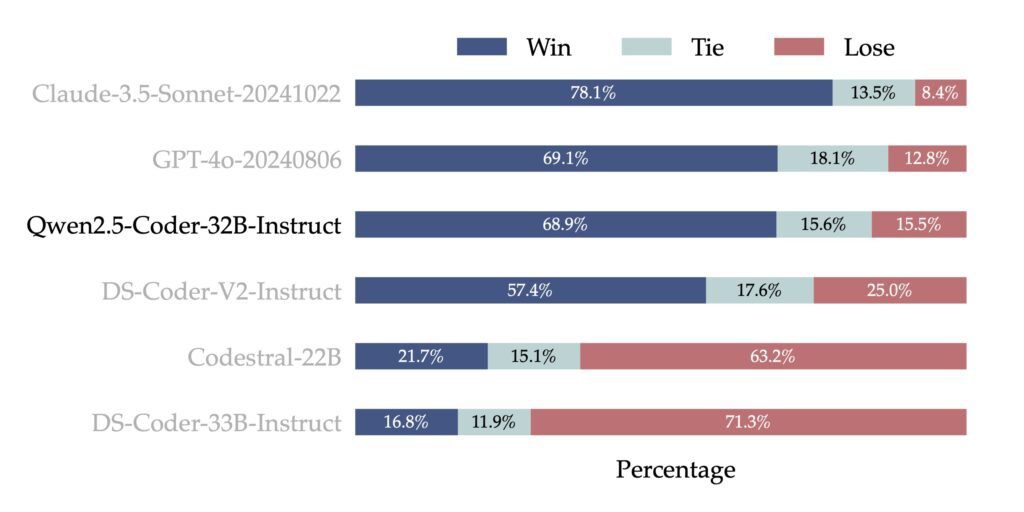

Human Preference

To assess how well Qwen2.5-Coder-32B-Instruct aligns with human preferences, the Qwen team built an internal annotated code preference benchmark called Code Arena (comparable to Arena Hard). The team used GPT-4o as the evaluation model for preference alignment, with an “A vs. B win” evaluation approach that measures the percentage of test set instances where model A’s score is higher than model B’s. The results below show the advantages of Qwen2.5-Coder-32B-Instruct in preference alignment.
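The “A vs. B win” metric described above reduces to a short calculation. The sketch below assumes each test instance has one numeric judge score per model and that ties do not count as wins for A; neither detail is specified in the description, and the scores are made up for illustration.

```python
def win_rate(scores_a: list[float], scores_b: list[float]) -> float:
    """Fraction of test instances where model A's score is strictly higher than model B's."""
    if not scores_a or len(scores_a) != len(scores_b):
        raise ValueError("score lists must be non-empty and of equal length")
    wins = sum(a > b for a, b in zip(scores_a, scores_b))
    return wins / len(scores_a)


# Made-up judge scores for four test instances.
model_a_scores = [8.0, 6.0, 7.0, 9.0]
model_b_scores = [7.0, 6.0, 5.0, 9.5]

# A wins on the first and third instances; the second is a tie and the fourth a loss.
print(f"A vs. B win rate: {win_rate(model_a_scores, model_b_scores):.0%}")
```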